Summary

The new Log Analytics query language contains a host of new keywords, statements, functions, and operators, making it easier than ever to do more with your data. Today’s focus is the new “parse” operator, which lets users extract multiple custom fields from their data dynamically at query time, making it easy to break apart compound data and analyze what is inside.

Introduction

As discussed in my previous post, OMS Log Analytics has recently undergone a major upgrade under the hood, which has brought with it a range of new tools and functionality. Much of that new functionality is tied to the new query language (“Kusto” for those who like codenames), which provides a wealth of new capabilities for data analysis.

Today we want to look at one of the new operators in particular: “parse”. According to the official documentation, it can be used to “extend a table by using multiple extract applications on the same string expression”. In other words, “parse” enables a user to extract custom fields from collected data on the fly, at query execution time, and then use those custom fields later in the query for data analysis just as they would any directly captured data.

Before we demonstrate exactly how this works, let’s take a look at both the raw data in OMS Log Analytics and the traditional means of using custom fields in OMS Log Analytics. This will enable us to demonstrate the specific benefits of the “parse” keyword over the traditional method.

Setting the Stage – The Data Structure

When OMS Log Analytics collects data, the structure of that data depends on how the data source supplies it: Log Analytics doesn’t know on its own how or when to extract data out of fields that were merged in the source system. Windows Event Log data is a great example. As you are likely aware, Event Log entries contain two properties under the hood, “ParameterXml” and “EventData”, which hold XML-structured data about the specific instance of an event. This data is then formatted into the event description string for easy human consumption, but there aren’t dedicated property values for every possible data point of every possible event. So when Log Analytics consumes that data, its structure matches the source structure, and we get fields for “ParameterXml” and “EventData”.
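To make that structure concrete, here is a minimal Python sketch. Python is used purely for illustration; this is not how Log Analytics processes data internally. The EVENT_DATA value is a hypothetical EventData string for a Service Control Manager event, shaped after the tags the parse query later in this post matches on, and the helper names are made up. The service name and state only become separate values once something digs them out of the XML:

```python
import xml.etree.ElementTree as ET

# Hypothetical EventData value for a Service Control Manager event 7036.
# The layout is an assumption modeled on the tags the parse query matches.
EVENT_DATA = (
    '<DataItem type="System.XmlData">'
    '<EventData xmlns="http://schemas.microsoft.com/win/2004/08/events/event">'
    '<Data Name="param1">Windows Update</Data>'
    '<Data Name="param2">running</Data>'
    '<Binary>77007500610075007300650072007600</Binary>'
    '</EventData>'
    '</DataItem>'
)


def local_name(tag: str) -> str:
    """Strip an '{namespace}' prefix from an ElementTree tag."""
    return tag.rsplit("}", 1)[-1]


def event_params(event_data: str) -> dict[str, str]:
    """Return the Name -> text pairs of every <Data> element in the string."""
    root = ET.fromstring(event_data)
    return {
        elem.get("Name"): elem.text or ""
        for elem in root.iter()
        # Compare the exact local name so <EventData> itself is skipped.
        if local_name(elem.tag) == "Data"
    }


print(event_params(EVENT_DATA))  # {'param1': 'Windows Update', 'param2': 'running'}
```

Everything a query might want to filter on lives inside that one string, which is why the raw fields alone are awkward to analyze.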

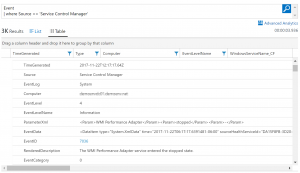

Let’s visualize this with a specific example. When a Windows service starts or stops, an event with ID 7036 from the source “Service Control Manager” is written to the Event Log. Each of these events contains three parameters, including the name of the service and the service state. We can collect that data with Log Analytics and present it with a simple query, like this:

```kusto
Event
| where Source == 'Service Control Manager'
```

In the image above you can see the “ParameterXml” and “EventData” fields and their values. By default, Log Analytics doesn’t extract any separate fields from them; we just get the raw data from the source system. Writing a query that analyzes specific services, service states, or combinations of the two against that raw data quickly becomes complex and error-prone, because it has to rely on wildcards and similar string-matching tricks. Acknowledging this issue, Log Analytics added a feature a while back called “Custom Fields”, designed to solve exactly this problem.
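The fragility of string matching against raw data is easy to reproduce. In this hypothetical Python sketch (the rows and helper names are invented for illustration), a substring filter aimed at the Windows Update service also matches a second service whose name merely starts with the same text, while an exact comparison against an extracted service name does not:

```python
# Two hypothetical raw EventData fragments for different services.
ROWS = [
    '<Data Name="param1">Windows Update</Data><Data Name="param2">running</Data>',
    '<Data Name="param1">Windows Update Medic Service</Data>'
    '<Data Name="param2">stopped</Data>',
]

PARAM1_OPEN = '<Data Name="param1">'


def raw_filter(rows: list[str], needle: str) -> list[str]:
    """Substring match on the raw string, like a 'contains'-style filter."""
    return [row for row in rows if needle in row]


def service_name(row: str) -> str:
    """Extract the text between the param1 opening tag and the next </Data>."""
    start = row.index(PARAM1_OPEN) + len(PARAM1_OPEN)
    end = row.index("</Data>", start)
    return row[start:end]


def field_filter(rows: list[str], name: str) -> list[str]:
    """Exact comparison against the extracted service name."""
    return [row for row in rows if service_name(row) == name]


print(len(raw_filter(ROWS, PARAM1_OPEN + "Windows Update")))  # 2
print(len(field_filter(ROWS, "Windows Update")))              # 1
```

Appending "</Data>" to the needle would fix this particular case, but every such detail is one more thing to get right in every query, which is the burden that extracted fields remove.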

Custom Fields – the Old Way

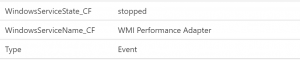

The original Custom Fields feature within Log Analytics is useful and powerful, enabling Log Analytics to extract new fields out of large, complex data using saved queries and pattern matching. Administrators identify the records they want to parse and define the custom field rules; from then on, whenever new data of the specified type comes into Log Analytics, it automatically extracts the fields and makes the “new” data available to users. Using this method, it is easy to take the records returned by the example query above and create custom fields for the Windows service name and Windows service state, as shown in the image above.

You will notice that each custom field created this way has the suffix “_CF” appended to its name. This is because defining a custom field directly extends the database under the hood; to ensure that future official solutions don’t break custom fields, and vice versa, each custom field is “tagged” so that it is readily identifiable. This approach was and is useful, but it comes with two key limitations.

First, custom fields created in this manner can only be created by administrators. If you have non-administrator users analyzing their datasets in Log Analytics, they have to contact an administrator and go through the appropriate change control process to get the fields they need extracted before they can start their analysis. Change control is very important, but it also means users take longer to complete their tasks, so the organization may be slower to act on the insights in its data.

Second, it complicates both field design and query sharing. Because the Custom Fields process extends the database, no two custom fields can be configured with the same name. Additionally, since each custom field is a workspace-wide change that has to be implemented separately in every workspace, any query that relies on that data only works in that workspace (and in any other workspace that happens to define custom fields with the same names). This makes queries harder to share and requires more effort to choose field names that are both descriptive and unlikely to collide with future data and future custom fields.

Fortunately, the new query language solves both of those problems with the “parse” operator.

Using the Parse Operator

The “parse” operator offers the best of both worlds: any user can extract data from other fields on the fly and assign it to “custom fields” within the query, which can then be used for further analysis immediately. No administrator has to make a global change, no database changes are made, and best of all, there is no incompatibility with other queries or other workspaces. Because the extraction happens at execution time, all that is needed is for the raw data to be present; the custom data can then be extracted and used.

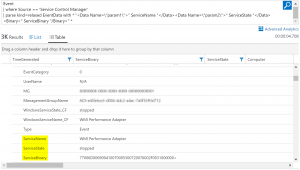

The “parse” operator works by taking a string expression and a pattern that shows which parts of that string should be extracted into which columns. In the pattern, string literals mark the fixed text surrounding the values, column names mark the values to capture, and “*” skips over text we don’t need. Using our example of the Windows events for service state changes above, we build the pattern against the raw EventData field, identifying where the data we want to extract is located. The following query demonstrates this, extracting three properties from the EventData field: “ServiceName”, “ServiceState”, and “ServiceBinary”:

```kusto
Event
| where Source == 'Service Control Manager' and EventID == 7036
| parse kind=relaxed EventData with * "<Data Name=\"param1\">" ServiceName "</Data><Data Name=\"param2\">" ServiceState "</Data><Binary>" ServiceBinary "</Binary>" *
```
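One way to picture what the pattern in the query above does is to translate it into a regular expression: each string literal must appear in order, each column name captures the text between its neighboring literals, and “*” matches anything. The Python sketch below does exactly that. It is an illustrative approximation, not Kusto’s implementation: it assumes non-greedy captures, treats every column as a string, and does not model how kind=relaxed handles partial or type-mismatched matches. The sample EventData value and the (kind, value) pattern encoding are hypothetical. In this sketch you can also see why the closing delimiter should be written in full as “</Binary>”: a truncated “/Binary>” still matches, but leaves a stray “<” at the end of ServiceBinary.

```python
import re

# Hypothetical EventData value for a Service Control Manager event 7036.
SAMPLE = (
    '<DataItem type="System.XmlData">'
    '<EventData xmlns="http://schemas.microsoft.com/win/2004/08/events/event">'
    '<Data Name="param1">Windows Update</Data>'
    '<Data Name="param2">running</Data>'
    '<Binary>77007500610075007300650072007600</Binary>'
    '</EventData></DataItem>'
)

# The query's pattern as (kind, value) pairs: "wild" is *, "lit" is a
# string literal, "col" is a column name to extract.
PATTERN = [
    ("wild", ""),
    ("lit", '<Data Name="param1">'),
    ("col", "ServiceName"),
    ("lit", '</Data><Data Name="param2">'),
    ("col", "ServiceState"),
    ("lit", "</Data><Binary>"),
    ("col", "ServiceBinary"),
    ("lit", "</Binary>"),
    ("wild", ""),
]


def to_regex(pattern: list[tuple[str, str]]) -> re.Pattern[str]:
    """Translate the pattern into one regex that must cover the whole string."""
    pieces = []
    for kind, value in pattern:
        if kind == "wild":
            pieces.append(".*?")                 # match and discard anything
        elif kind == "lit":
            pieces.append(re.escape(value))      # delimiter must appear verbatim
        else:
            pieces.append(f"(?P<{value}>.*?)")   # capture up to the next delimiter
    return re.compile("".join(pieces), re.DOTALL)


def parse(text: str, pattern: list[tuple[str, str]]) -> dict[str, str]:
    """Return the extracted columns; in this sketch a non-match leaves them empty."""
    match = to_regex(pattern).fullmatch(text)
    if match is None:
        return {value: "" for kind, value in pattern if kind == "col"}
    return match.groupdict()


print(parse(SAMPLE, PATTERN))

# Same pattern, but with the truncated closing delimiter "/Binary>".
TRUNCATED = PATTERN[:-2] + [("lit", "/Binary>"), ("wild", "")]
print(parse(SAMPLE, TRUNCATED)["ServiceBinary"])  # ends with a stray "<"
```

The key idea is that the literals do all the anchoring: a capture simply runs until the next literal in the pattern appears, so a literal that is slightly off still matches somewhere and silently shifts the captured text.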

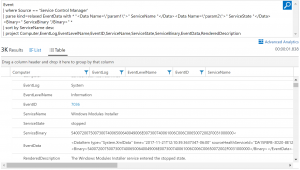

In the image above, you can see that the output of the query contains our three parsed fields: no “_CF” suffix and no administrator changes needed; they are simply there, just like any other field. We can then extend our query to use that extracted data. Here is a simple example that sorts the output by the “ServiceName” parsed field and then uses “project” to keep only a selection of the columns:

```kusto
Event
| where Source == 'Service Control Manager' and EventID == 7036
| parse kind=relaxed EventData with * "<Data Name=\"param1\">" ServiceName "</Data><Data Name=\"param2\">" ServiceState "</Data><Binary>" ServiceBinary "</Binary>" *
| sort by ServiceName desc
| project Computer, EventLog, EventLevelName, EventID, ServiceName, ServiceState, ServiceBinary, EventData, RenderedDescription
```
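Once extracted, the parsed columns behave like any other column in the later steps of the query. As a rough Python analogy (the rows are hypothetical, already-parsed records with only a few columns, and the helper names are made up), “sort by ServiceName desc” and “project” reduce to an ordinary sort and a column selection:

```python
# Hypothetical rows as they might look after the parse step (subset of columns).
rows = [
    {"Computer": "SRV01", "EventLog": "System", "EventID": 7036,
     "ServiceName": "Print Spooler", "ServiceState": "running"},
    {"Computer": "SRV02", "EventLog": "System", "EventID": 7036,
     "ServiceName": "Windows Update", "ServiceState": "stopped"},
    {"Computer": "SRV01", "EventLog": "System", "EventID": 7036,
     "ServiceName": "Background Intelligent Transfer Service",
     "ServiceState": "running"},
]

# Columns to keep, loosely mirroring the query's project list.
COLUMNS = ["Computer", "EventID", "ServiceName", "ServiceState"]


def sort_desc(table: list[dict], column: str) -> list[dict]:
    """Analogue of 'sort by <column> desc' (plain code-point string ordering)."""
    return sorted(table, key=lambda row: row[column], reverse=True)


def project(table: list[dict], columns: list[str]) -> list[dict]:
    """Analogue of 'project': keep only the named columns, in that order."""
    return [{name: row[name] for name in columns} for row in table]


result = project(sort_desc(rows, "ServiceName"), COLUMNS)
for row in result:
    print(row["ServiceName"], row["ServiceState"])
```

Neither step needs to know that ServiceName and ServiceState were extracted moments earlier, which is exactly the point the query makes.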

The “Parse” operator enables any user to extract data on the fly and use it however they need, decreasing the time between data collection and analysis results by providing flexibility and removing administrative overhead. I strongly recommend everyone read the full documentation on it and see how they can start using it today to do more with their data.

Conclusion

The goal of this post was to introduce OMS Log Analytics’ new “parse” operator. It enables any user of Log Analytics to break apart complex string data into calculated columns at query execution time, so users can process complex data without relying on administrators to make workspace-wide changes. “Parse” arrived with the recent OMS Log Analytics upgrade, alongside a host of other new query options. We’ll be looking more at the new query language’s capabilities in future posts, so stay tuned!